Introduction

Artificial intelligence (AI) is constantly evolving with the potential to revolutionize many aspects of our lives. However, alongside the exciting opportunities it presents, AI also introduces new challenges, particularly in the realm of cybersecurity.

At Futurism Technologies, we believe in a comprehensive approach to cybersecurity that goes beyond traditional methods. This guide examines the relationship between AI and cybersecurity, covering the challenges, secure development lifecycles, and best practices that safeguard your AI solutions and systems from emerging cyber threats and attack vectors. Join us as we explore a future where AI and cybersecurity converge and innovation thrives alongside protection.

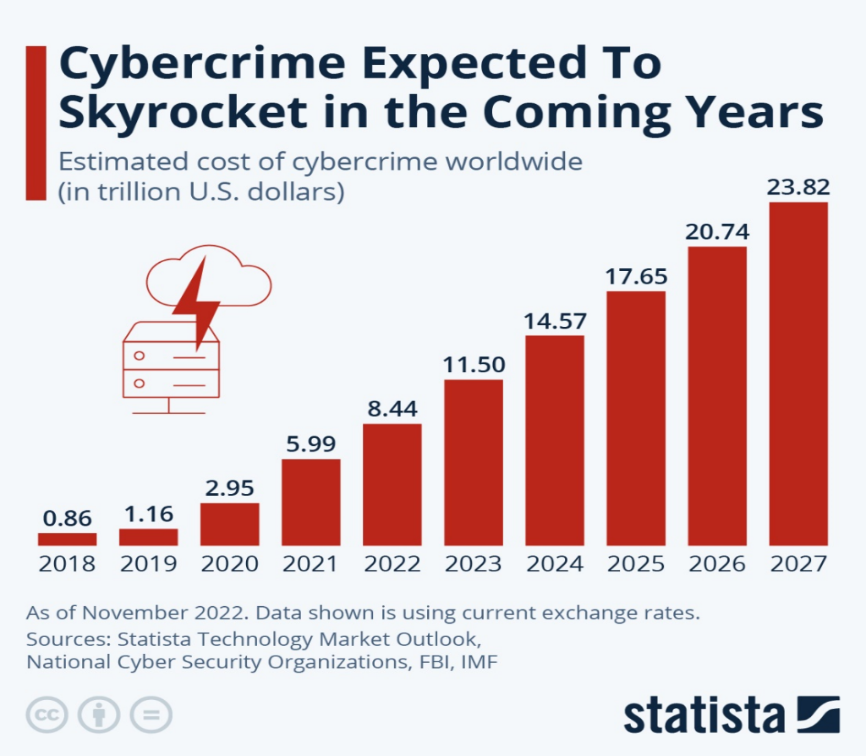

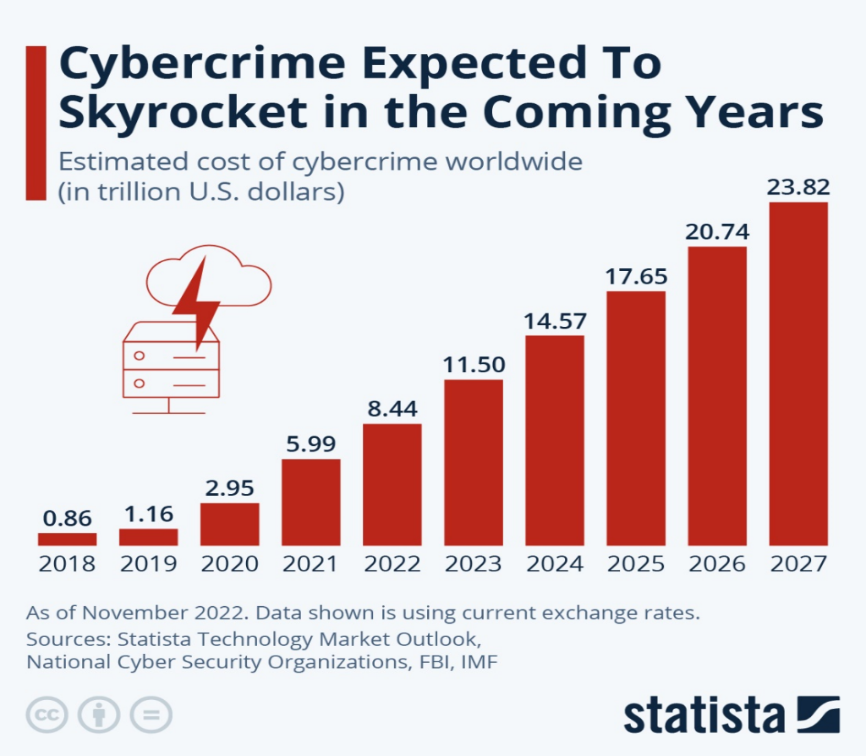

By 2025, cybercrime could cost the world a jaw-dropping $17.65 trillion!

(Source: Statista)

Understanding Unique Security Challenges of AI

The rise of artificial intelligence (AI) and machine learning (ML) presents exciting possibilities, but also introduces new security concerns. Beyond the weaknesses they share with traditional IT systems, AI models are vulnerable to adversarial attacks. In these attacks, malicious actors manipulate the data used to train the model, the inputs it receives at run time, or the model itself to cause the system to produce incorrect or harmful outputs.

Securing AI goes beyond traditional methods like firewalls and intrusion detection. We need protection against threats that exploit weaknesses within the algorithms themselves. These attacks can be subtle and difficult to detect, potentially altering the system's decisions and compromising its effectiveness.

To address these challenges, a multi-layered approach is necessary. First, we need a deep understanding of the specific vulnerabilities present in the AI model. This involves analyzing the training data, the algorithm itself, and potential attack vectors. Second, we need to deploy specialized defenses such as adversarial training, in which the model also learns from deliberately manipulated examples, to make it more resistant to manipulation. Third, explainability and auditing methods can help us understand the model's decision-making process, providing valuable insights for identifying and mitigating potential risks. By acknowledging these unique security challenges and taking proactive measures, we can ensure the responsible and secure deployment of these powerful AI systems.
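As a rough illustration of the adversarial training mentioned above, the sketch below trains a tiny logistic-regression model and, when `eps` is greater than zero, also fits each example after perturbing it with the fast gradient sign method (FGSM). Everything here is an assumption made for illustration: the toy data, the function names, the learning rate, and the choice of FGSM as the attack. Production systems would normally rely on an established ML framework and a broader set of attacks.

```python
import math

def sign(v):
    # Returns -1, 0, or 1.
    return (v > 0) - (v < 0)

def predict_proba(w, b, x):
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    z = max(-60.0, min(60.0, z))  # clamp so math.exp cannot overflow
    return 1.0 / (1.0 + math.exp(-z))

def fgsm(w, b, x, y, eps):
    # For logistic loss the gradient with respect to the input is (p - y) * w.
    # FGSM shifts every feature by eps in the direction that increases the loss.
    p = predict_proba(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]

def train(data, eps=0.0, epochs=100, lr=0.1):
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            examples = [(x, y)]
            if eps > 0:
                # Adversarial training: also fit a perturbed copy of the example.
                examples.append((fgsm(w, b, x, y, eps), y))
            for xs, ys in examples:
                g = predict_proba(w, b, xs) - ys  # d(loss)/d(logit)
                w = [wi - lr * g * xi for wi, xi in zip(w, xs)]
                b -= lr * g
    return w, b

def accuracy(w, b, data, eps=0.0):
    # With eps > 0, each point is first attacked with FGSM against this model.
    hits = 0
    for x, y in data:
        x_eval = fgsm(w, b, x, y, eps) if eps > 0 else x
        hits += int((predict_proba(w, b, x_eval) >= 0.5) == (y == 1))
    return hits / len(data)

# Hypothetical, well-separated toy data: (features, label).
DATA = [([2.0, 1.5], 1), ([1.5, 2.0], 1), ([2.5, 2.5], 1),
        ([-2.0, -1.5], 0), ([-1.5, -2.0], 0), ([-2.5, -2.5], 0)]

if __name__ == "__main__":
    for name, eps in [("standard", 0.0), ("adversarial", 0.5)]:
        w, b = train(DATA, eps=eps)
        print(name, "clean:", accuracy(w, b, DATA),
              "under attack:", accuracy(w, b, DATA, eps=0.5))
```

Because this toy data is well separated, the sketch is meant to show where the perturbed examples enter the training loop, not to measure a robustness gain. Whether adversarial training helps in practice depends on the model, the data, and the attacks it is evaluated against.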

Expanded Understanding of AI Security Challenges

Rising Statistics: A Global Concern

Recent research by Cybersecurity Ventures predicts that cybercrime will cost the world $10.5 trillion annually by 2025, a significant increase from $3 trillion in 2015. This stark rise underscores the expanding threat landscape, particularly as AI and ML technologies become more integrated into critical systems. AI-driven attacks are becoming more sophisticated, with adversaries using AI to develop malware that evades detection, automate social engineering attacks, and optimize breach strategies.

Use-Cases Highlighting AI Vulnerabilities

- Deepfake Technology: Deepfakes have emerged as a potent tool for misinformation, leveraging AI to create highly convincing fake videos and audio recordings. This technology poses significant threats in various domains, including politics, where it can be used to manipulate elections, and cybersecurity, where it can trick biometric security mechanisms.

- AI-Powered Phishing: Cybercriminals are using AI to craft highly personalized phishing emails at scale, which are more difficult to distinguish from legitimate communications. These messages often exploit social engineering techniques, tailored to individual vulnerabilities, making them significantly more effective.

- Adversarial Machine Learning (AML): In adversarial attacks, attackers input specially crafted data into AI models to manipulate their output, compromising their integrity. For instance, subtle alterations to input images can trick an AI-powered surveillance system into misidentifying objects or individuals, posing serious security implications.
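To make the adversarial machine learning item above more concrete, here is a minimal sketch of how small a crafted change can be. It assumes a plain linear classifier whose weights the attacker knows; the weights, bias, and input are hypothetical values chosen for illustration. For such a model, changing every feature by just over `|score| / sum(|w_i|)` in the right direction is enough to flip the decision.

```python
def sign(v):
    # Returns -1, 0, or 1.
    return (v > 0) - (v < 0)

def score(w, b, x):
    # Positive score means "flagged", negative means "allowed" (hypothetical labels).
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def smallest_flip(w, b, x, margin=1e-6):
    """Return (eps, x_adv), where changing each feature by eps flips the sign of the score."""
    l1 = sum(abs(wi) for wi in w)
    if l1 == 0:
        raise ValueError("a model with all-zero weights cannot be flipped by changing x")
    s = score(w, b, x)
    # Moving every feature by eps against the weights changes the score by eps * l1.
    eps = abs(s) / l1 + margin
    step = -eps if s > 0 else eps
    x_adv = [xi + step * sign(wi) for xi, wi in zip(x, w)]
    return eps, x_adv

W = [0.8, -0.5, 0.3, 0.4]   # hypothetical model weights
B = -0.1                    # hypothetical bias
X = [0.6, 0.2, 0.5, 0.1]    # hypothetical input the model flags

if __name__ == "__main__":
    eps, x_adv = smallest_flip(W, B, X)
    print("original score:", score(W, B, X))
    print("per-feature change:", eps)
    print("adversarial score:", score(W, B, x_adv))
```

Real attacks on deep models use iterative, gradient-based variants of the same idea, and real attackers often have only partial knowledge of the model, so this should be read as an intuition aid rather than a realistic attack.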

Examples of AI Security Breaches

- Twitter Bot Influence: AI-driven bots have been used to amplify misinformation on social media platforms. For example, during significant political events, these bots have spread fake news, influencing public opinion and potentially affecting election outcomes.

- Autonomous Vehicle Hacking: Researchers have demonstrated that small alterations to road signs, such as stickers that a human driver would easily overlook, can cause AI-driven autonomous vehicles to misinterpret the signs, leading to potential accidents. This vulnerability exposes the critical need for robust AI security in emerging transportation technologies.

- Healthcare Data Breaches: AI systems in healthcare, used for patient data analysis and predictive modeling, have become targets for cyberattacks. The breaches not only compromise sensitive patient information but also undermine the trust in AI systems designed to enhance patient care.

Enhancing AI Security: A Forward-Looking Approach

Addressing these unique security challenges requires a holistic strategy that encompasses not only the technical defenses but also a broader understanding of AI's societal impacts. Efforts such as incorporating adversarial training, enhancing transparency through explainable AI, and fostering a security-first culture within AI development teams are essential steps forward. Moreover, regulatory frameworks and ethical guidelines will play a pivotal role in ensuring AI systems are designed, deployed, and maintained with security and privacy at their core.

By acknowledging these challenges and proactively seeking solutions, we can leverage AI's potential while safeguarding against the sophisticated cyber threats that accompany technological advancements.

Building Secure AI: A Lifecycle Approach

Developing trustworthy AI systems requires a comprehensive approach to security, starting from the very beginning. Here's a breakdown of the key stages in a secure AI development lifecycle:

- Designing for Security: Just like building a house, securing an AI system starts with a solid foundation. This stage involves brainstorming potential threats that AI systems are uniquely vulnerable to, compared to traditional software. It's like thinking about the different ways someone could break into your house, but for AI systems, we're considering things like data leaks, manipulating the model itself, and even ethical concerns. We also need to make sure the AI system follows the rules and regulations in place, just like ensuring your house meets building codes.

- Building with Security in Mind: Once the blueprint is ready, construction begins. In the development phase, security is woven into the very fabric of the AI system. This involves practices like double-checking the code for vulnerabilities, similar to inspecting the quality of materials used in construction. Additionally, just like stress-testing a building to see how it handles pressure, we perform VAPT (Vulnerability Assessment and Penetration Testing) to see if the system can withstand potential attacks. Finally, just like using reliable suppliers to build your house, we need to ensure the security of everything that contributes to the AI system, from the software libraries to the data used to train it.

- Deploying Securely: Moving the AI system from the building site to your actual house comes with new security considerations. We need to protect both the physical servers where the AI runs and the AI model itself. This involves setting up identity and access management solutions that enforce strong security controls, such as complex passwords and secure communication channels, similar to installing locks and alarms in your house. But security goes beyond technical safeguards; we also need to make sure the AI system is used responsibly and ethically, just like ensuring you use your house in a way that respects your neighbors.

- Keeping it Secure and Up-to-Date: Just like maintaining your house requires constant vigilance, keeping an AI system secure is an ongoing process. We need to continuously monitor the system for any suspicious activity, similar to having a security system that alerts you to potential break-ins. Additionally, like regularly updating your home security system, we need to keep the AI system updated with the latest security patches and use advanced threat protection solutions to adapt it to new threats and regulations as they emerge. By staying proactive, we can ensure our AI systems remain resilient and adaptable over time.

This lifecycle approach highlights the importance of weaving security throughout the entire development process, from initial design to ongoing maintenance, to ensure trustworthy and reliable AI systems.
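One small, concrete piece of the supply-chain point in the lifecycle above is verifying that model files and training data have not changed since they were approved. The sketch below records SHA-256 digests in a manifest and re-checks them before deployment. The manifest layout, the file name `model.bin`, and the function names are hypothetical; this is a minimal sketch under those assumptions, and a real pipeline would typically also sign the manifest and pin third-party library versions.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path

def sha256_file(path, chunk_size=1 << 20):
    # Hash the file in chunks so large model files do not need to fit in memory.
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_artifacts(manifest, base_dir):
    """Return the names of artifacts that are missing or whose digest differs.

    `manifest` maps a relative file name to its expected SHA-256 hex digest.
    """
    failures = []
    for name, expected in manifest.items():
        path = Path(base_dir) / name
        if not path.is_file() or not hmac.compare_digest(
            sha256_file(path), expected.lower()
        ):
            failures.append(name)
    return failures

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        model = Path(tmp) / "model.bin"               # hypothetical artifact
        model.write_bytes(b"weights-v1")
        manifest = {"model.bin": sha256_file(model)}  # recorded at approval time
        print(verify_artifacts(manifest, tmp))        # nothing has changed yet
        model.write_bytes(b"weights-v1-tampered")
        print(verify_artifacts(manifest, tmp))        # the modified file is reported
```

A check like this only detects changes made after the manifest was created. It does not say whether the approved data or model was trustworthy in the first place, which is why it complements rather than replaces code review and testing.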

Building a Secure Foundation for AI

Securing AI systems effectively involves embracing a set of key principles and practices:

- Transparency and Accountability: AI shouldn't be a black box. People should be able to understand how the system works and makes decisions, especially when these decisions impact the public or have significant consequences. This transparency is crucial for holding the system accountable.

- Security by Design: Security shouldn't be an afterthought bolted onto the finished product. It should be woven into the fabric of the AI system from the very beginning, from the initial design phase all the way through to deployment and ongoing maintenance.

- Owning Your Security: Organizations have a responsibility to ensure the security of their AI systems. This ownership goes beyond the technical team and extends to leadership, making security a core value throughout the organization.

- Building a Security-Minded Team: Effective AI security requires the right team structure. This means having dedicated security experts focused on AI, clear channels for reporting security concerns, and consistent training for all staff on the latest security best practices.
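For the transparency principle above, the simplest possible case is a linear scoring model, where each feature's contribution to a decision is just its weight times its value. The sketch below ranks those contributions for one request. The login-risk model, its feature names, and its weights are hypothetical; more complex models need dedicated attribution methods, which this sketch does not attempt to cover.

```python
def explain_linear(weights, bias, features):
    """Return (score, contributions ranked by absolute size) for a linear model."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked

# Hypothetical login-risk model and one hypothetical request.
WEIGHTS = {"failed_logins": 0.9, "new_device": 1.5, "account_age_years": -0.2}
BIAS = -2.0
REQUEST = {"failed_logins": 3, "new_device": 1, "account_age_years": 4}

if __name__ == "__main__":
    score, ranked = explain_linear(WEIGHTS, BIAS, REQUEST)
    print(f"score = {score:.2f}")
    for name, value in ranked:
        print(f"  {name:>18}: {value:+.2f}")
```

Logging an explanation like this alongside each high-impact decision gives reviewers something concrete to audit when a decision is questioned.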

Importance of Secure AI Guidelines

As AI becomes increasingly prevalent in our lives, its security becomes paramount. This extends beyond safeguarding data and computer systems; it is about fostering trust in the technology itself. Following these guidelines helps companies build robust and reliable AI systems that inspire confidence in users and stakeholders alike. Strong security measures not only shield critical information but also make AI systems more dependable.

The Critical Role of Guidelines in Evolving Threat Landscapes

According to the Global Risks Report 2023 by the World Economic Forum, cyberattacks rank among the top global risks by likelihood and impact over the next decade. As AI technologies become increasingly sophisticated, the potential for AI-specific threats grows, emphasizing the need for comprehensive security guidelines. The AI security market itself is projected to reach $38.2 billion by 2026, reflecting the growing investment in securing AI systems against evolving threats.

Use-Cases Demonstrating the Need for Guidelines

- Financial Sector AI Applications: In the finance industry, AI is used for fraud detection, algorithmic trading, and customer service chatbots. The misuse of AI in this sector could lead to massive financial losses and erode trust in financial institutions. Implementing secure AI guidelines ensures that AI applications in finance are robust against attacks, safeguarding sensitive financial data and maintaining the integrity of financial markets.

- AI in Autonomous Systems: Whether in self-driving cars or unmanned drones, AI systems control critical decisions that can affect human lives. Security vulnerabilities could lead to disastrous consequences. Secure AI guidelines are vital for ensuring that autonomous systems operate safely and predictably under all conditions, protecting users from harm due to security lapses.

Examples Highlighting the Importance of Secure AI Guidelines

- AI in Healthcare Misdiagnosis: An AI system designed for diagnosing diseases, if manipulated due to inadequate security measures, could lead to incorrect treatments. For instance, adversarial attacks on AI imaging tools can alter cancer screening results, putting patients' lives at risk. Secure AI guidelines ensure these systems are tested against such manipulations, maintaining accuracy and trust in AI-assisted healthcare.

- Social Media Manipulation: AI-driven algorithms control what content is shown to users on social media platforms. Without strict security and ethical guidelines, these systems can be exploited to spread misinformation or biased content, influencing public opinion and endangering democratic processes. Secure AI guidelines help in creating safeguards against the misuse of AI in manipulating content dissemination.

The Way Forward: Adopting and Implementing AI Security Guidelines

- Establishing Global Standards: The establishment of global standards for AI security is imperative. Organizations have begun to develop ethical guidelines for AI and autonomous systems, emphasizing the importance of transparency, accountability, and privacy. Adopting these guidelines helps ensure that AI technologies are developed and used responsibly, fostering trust among users and stakeholders.

- Collaborative Efforts for Enhancing AI Security: Collaborative initiatives between governments, industry, and academia are crucial for advancing AI security standards. These partnerships facilitate the sharing of best practices, threat intelligence, and research on new security methodologies, ensuring that AI systems remain resilient against sophisticated cyber threats.

- Continuous Education and Awareness: Educating developers, users, and policymakers about the importance of AI security is essential. Ongoing training and awareness programs can empower stakeholders to recognize and mitigate risks associated with AI systems, ensuring that security considerations are integrated throughout the AI lifecycle.

Conclusion

Creating secure AI systems is a challenging yet essential task. As AI increasingly integrates into diverse sectors, the need for robust security protocols grows more critical. Adhering to these guidelines enables organizations to reduce risks and fully leverage AI's capabilities, helping to build a secure and thriving digital landscape.

Futurism Technologies, an expert in AI security, safeguards your AI systems against evolving cyber threats. We offer comprehensive digital product engineering solutions, seamlessly integrating robust security throughout the entire AI lifecycle, from design and deployment to ongoing maintenance. Our skilled AI and cybersecurity team prioritizes both security and ethical compliance, ensuring your AI operates reliably and responsibly, fostering trust in the future of AI.